The automated decision-making processes of self-driving cars are ill-equipped to protect the safety of people of color in public.

"If you’re a person with dark skin, you may be more likely than your white friends to get hit by a self-driving car," reports Sigal Samuel.

That's the finding of a new study by researchers at the Georgia Institute of Technology, which analyzed autonomous vehicle footage from New York City, San Francisco, Berkeley, and San Jose. Samuel explains the study in more detail:

The authors of the study started out with a simple question: How accurately do state-of-the-art object-detection models, like those used by self-driving cars, detect people from different demographic groups? To find out, they looked at a large dataset of images that contain pedestrians. They divided up the people using the Fitzpatrick scale, a system for classifying human skin tones from light to dark.

The researchers then analyzed how often the models correctly detected the presence of people in the light-skinned group versus how often they got it right with people in the dark-skinned group.

The results of the study are concerning, to say the least.

Detection was five percentage points less accurate, on average, for the dark-skinned group. That disparity persisted even when researchers controlled for variables like the time of day in images or the occasionally obstructed view of pedestrians.
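To make the "five percentage points" figure concrete, here is a minimal sketch of how a gap between two groups' detection rates can be computed. The counts are invented for illustration and are not taken from the study; the names `GroupCounts`, `detection_rate`, and `gap_in_percentage_points` are hypothetical, and the study's actual metric may be defined differently.

```python
from dataclasses import dataclass


@dataclass
class GroupCounts:
    detected: int  # pedestrians in this group that the model found
    total: int     # pedestrians in this group annotated in the images


def detection_rate(counts: GroupCounts) -> float:
    """Fraction of annotated pedestrians that the model detected."""
    return counts.detected / counts.total


def gap_in_percentage_points(light: GroupCounts, dark: GroupCounts) -> float:
    """Difference between the two detection rates, in percentage points."""
    return 100.0 * (detection_rate(light) - detection_rate(dark))


# Made-up counts chosen only to illustrate a five-point gap (not study data).
light = GroupCounts(detected=900, total=1000)
dark = GroupCounts(detected=850, total=1000)

gap = gap_in_percentage_points(light, dark)
print(f"{gap:.1f} percentage points")
```

A gap in percentage points is the plain difference between two rates (here 90% versus 85%), not a relative change of 5 percent.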

Samuel ties the problem to the record of algorithmic bias—human bias influencing the results of automated decision-making systems. The human failure behind the machine's failure also implies potential solutions to the problem.

FULL STORY: A new study finds a potential risk with self-driving cars: failure to detect dark-skinned pedestrians

Planetizen Federal Action Tracker

A weekly monitor of how Trump’s orders and actions are impacting planners and planning in America.

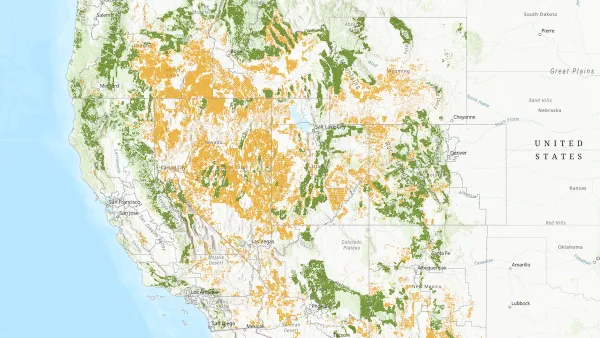

Map: Where Senate Republicans Want to Sell Your Public Lands

For public land advocates, the Senate Republicans’ proposal to sell millions of acres of public land in the West is “the biggest fight of their careers.”

Restaurant Patios Were a Pandemic Win — Why Were They so Hard to Keep?

Social distancing requirements and changes in travel patterns prompted cities to pilot new uses for street and sidewalk space. Then it got complicated.

Platform Pilsner: Vancouver Transit Agency Releases... a Beer?

TransLink will receive a portion of every sale of the four-pack.

Toronto Weighs Cheaper Transit, Parking Hikes for Major Events

Special event rates would take effect during large festivals, sports games and concerts to ‘discourage driving, manage congestion and free up space for transit.”

Berlin to Consider Car-Free Zone Larger Than Manhattan

The area bound by the 22-mile Ringbahn would still allow 12 uses of a private automobile per year per person, and several other exemptions.

Urban Design for Planners 1: Software Tools

This six-course series explores essential urban design concepts using open source software and equips planners with the tools they need to participate fully in the urban design process.

Planning for Universal Design

Learn the tools for implementing Universal Design in planning regulations.

Heyer Gruel & Associates PA

JM Goldson LLC

Custer County Colorado

City of Camden Redevelopment Agency

City of Astoria

Transportation Research & Education Center (TREC) at Portland State University

Camden Redevelopment Agency

City of Claremont

Municipality of Princeton (NJ)